There was a nice piece on the online FT last night on the forces impacting the reinsurance sector. Lancashire, which is behaving oddly these days, was one of the firms mentioned. Lancashire looks like it’s set to drop by approximately 12% (the amount of the special dividend) when it goes ex-dividend after today, the 28th (although Yahoo has been showing it dropping by 10%-12% at the end of trading for several days now, including yesterday). If it does drop to the £5.50 level, that’s approximately 123% of diluted tangible book value. Quite a comedown from the loftier valuations of 150%-170% under previous CEO Richard Brindle!

Anyway, this post is not about that. A major part of modern risk management in the insurance sector is applying real-life scenarios to risk portfolios to assess their impact. Lloyd’s has been doing it for years with its realistic disaster scenarios (RDS). Insurers are adept at using scenarios generated by professional catastrophe models from firms like RMS and AIR for so-called peak zones like US hurricanes or Japanese earthquakes. Many non-peak scenarios, however, are not explicitly modelled by such firms.

The horrors of the tsunamis from the 2011 Tōhoku and the 2004 Indian Ocean earthquakes have been brought home vividly in this multi-media age. The damage in human terms from the receding waters full of debris makes the realities of such events all too real. Approximately 80% of tsunamis come from earthquakes, and history is littered with examples of large destructive tsunamis resulting from earthquakes – the 1755 Great Lisbon earthquake in Portugal, the 1783 Calabrian and the 1908 Messina earthquakes in Italy, the 1896 Sanriku earthquake in Japan, the recently discovered 365 AD Mediterranean quake, the 1700 Cascadia Megathrust earthquake off the west coast of the US, and the 1958 Lituya Bay quake in Alaska are but a few examples.

Volcanoes are another potential cause of mega tsunamis, as many volcanoes are found next to the sea, notably in countries bordering the Pacific Ocean, the northern Mediterranean and the Caribbean Sea. One scenario, put forward in a 2001 paper by Steven Ward and Simon Day, is the possibility of a mega tsunami from the collapse of an unstable volcanic ridge left by previous Cumbre Vieja eruptions in 1949 and 1971 on La Palma in the Canary Islands. The threat was dramatically brought to life by a 2013 BBC Horizon programme called “Could We Survive A Mega-Tsunami?”. Unfortunately I could not find a link to the full programme but a taster can be found here.

The documentary detailed a scenario where a future eruption could cause a massive landslide of 500 km³ of rock crashing into the sea, causing multiple waves that would travel across the Atlantic Ocean and devastate major cities along the US east coast, as well as parts of Africa, Europe and southern England & Ireland. The damage would be unimaginable, causing over 4 million deaths and economic losses of over $800 billion. The damage to port and transport infrastructure would also create severe post-event obstacles to rescue and recovery efforts.

The possibility of such a massive landslide resulting from a La Palma eruption has been disputed by many scientists. In 2006, Dutch scientists released research which concluded that the south west flank of the island was stable and unlikely to fall into the sea for at least another 10,000 years. More recent research in 2013 has shown that 8 historical landslides associated with volcanoes in the Canary Islands each occurred in staggered, discrete stages rather than as a single failure, and that the likelihood of one large 500 km³ landslide is therefore extremely remote. The report states:

“This has significant implications for geohazard assessments, as multistage failures reduce the magnitude of the associated tsunami. The multistage failure mechanism reduces individual landslide volumes from up to 350 km³ to less than 100 km³. Thus although multistage failure ultimately reduce the potential landslide and tsunami threat, the landslide events may still generate significant tsunamis close to source.”

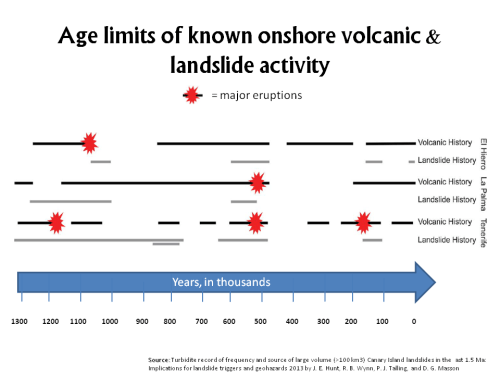

Another graph from the research shows that the timeframe over which such events should be viewed is in the thousands of years.

Whatever about the feasibility of the events dramatised in the BBC documentary, the scientists behind the latest research do highlight the difference between the probability of an event occurring and its impact if it does occur.

“Although the probability of a large-volume Canary Island flank collapse occurring is potentially low, this does not necessarily mean that the risk is low. Risk is dependent both on probability of occurrence and the resultant consequences of such events, namely generation of a tsunami(s). Therefore, determining landslide characteristics of past events will ultimately better inform tsunami modelling and risk assessments.”

And, after all, that’s what good risk management should be all about. Tsunamis are caused by large, infrequent events so, as with all natural catastrophes, we should be wary that historical event catalogues may be a poor guide to future hazards.
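
To make the distinction between probability and impact concrete, here is a rough sketch in Python of how a low-probability scenario can still carry a meaningful expected annual loss. The numbers are illustrative assumptions only: the $800 billion loss is the documentary's figure, the 10,000-year return period is simply borrowed from the timescale mentioned above and is not a measured frequency, and the second scenario (1,000 years, $20 billion) is entirely made up for comparison. The `Scenario` class and its field names are my own invention, not taken from any catastrophe model.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    return_period_years: float  # ASSUMED average number of years between events
    loss_usd_bn: float          # ASSUMED economic loss (USD billions) if it happens

    @property
    def annual_probability(self) -> float:
        # Crude approximation: one event per return period.
        return 1.0 / self.return_period_years

    @property
    def expected_annual_loss_usd_bn(self) -> float:
        # Risk = probability of occurrence x consequence.
        return self.annual_probability * self.loss_usd_bn


# Illustrative inputs only; none of these are modelled results.
scenarios = [
    Scenario("Single large flank collapse", 10_000, 800.0),
    Scenario("Smaller multistage failure (hypothetical)", 1_000, 20.0),
]

for s in scenarios:
    print(
        f"{s.name}: annual probability {s.annual_probability:.4%}, "
        f"expected annual loss ${s.expected_annual_loss_usd_bn * 1000:,.0f}m"
    )
```

With these made-up inputs, the remote mega-event carries the larger expected annual loss (roughly $80m a year against $20m) despite being ten times less likely. An expected value also says nothing about the tail, which is why scenario testing looks at the full loss on occurrence and not just the average.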