I thought it was time for a quick catch-up on all things reinsurance and specialty insurance since my last post a year ago. At that time, it looked like the underlying rating environment was gaining momentum and a hoped-for return to underwriting profitability looked on the cards. Of course, since then, the big game changer has been COVID-19.

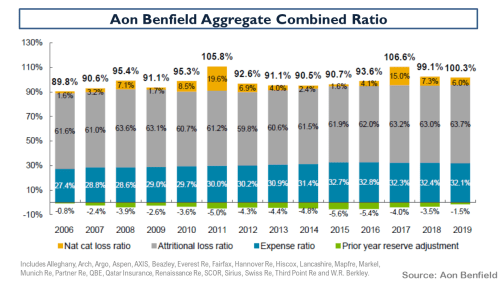

The 2019 results of selected firms from the Aon Reinsurance Aggregate (ARA), as below, illustrate the position as we entered this year. It is interesting to note that reserve releases have virtually dried up and the 2019 accident year combined ratio excluding cats is around 96%.

The Willis Re subset of aggregate results is broadly similar to the ARA, although it contains a few more of the smaller players and some life reinsurers and excludes firms like Beazley and Hiscox. It shows that on an underlying basis (i.e. accident year with a normalised cat load), the trend is still upwards and more rate improvement is needed to improve attritional loss ratios.

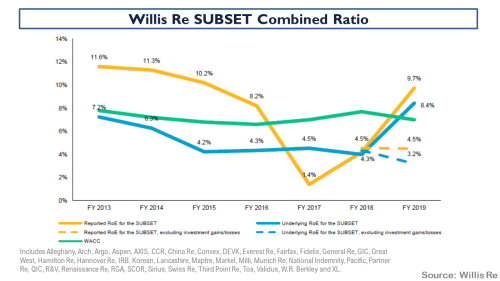

The breakdown of the pre-tax results of the ARA portfolio, as below, shows that investment returns and gains saved the day in 2019.

The ROEs of the Willis portfolio with these gains stripped out illustrate again how underwriting performance needs to improve.

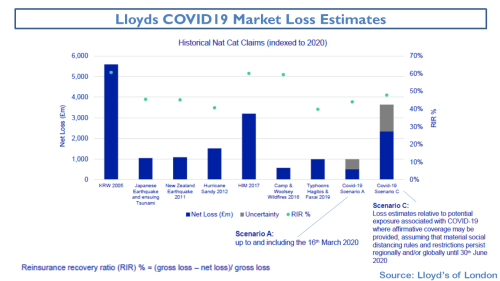

Of course, COVID-19 has impacted the sector both in terms of actual realised losses (e.g. event cancellations) and through the cloud of uncertainty over reserves for multiple exposures yet to be fully realised. There remains much uncertainty in the sector about the exact size of the potential losses, with industry estimates ranging widely. Swiss Re recently put the figure at between $50 billion and $80 billion. To date, firms have established reserves of just over $20 billion. One of the key uncertainties is the potential outcome of litigation around business interruption cover. The case brought in the UK by the FCA on behalf of policyholders aims to expedite lengthy legal cases over the main policy wordings, with an outcome expected in mid-September. Lloyd's estimate of the industry insurance loss is within the Swiss Re range and their latest June estimate is shown below against other historical events.
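As a rough sanity check on the gap between the reserves booked so far and the Swiss Re range quoted above, the short Python sketch below works out the share of the estimated loss already reserved. It treats "just over $20 billion" as exactly $20 billion, which is a simplifying assumption on my part, and the function and variable names are purely illustrative.

```python
def reserved_share(reserved_bn: float, estimate_bn: float) -> float:
    """Fraction of an estimated industry loss already covered by booked reserves."""
    return reserved_bn / estimate_bn


RESERVED_BN = 20.0           # assumption: "just over $20 billion" rounded to 20
LOW_BN, HIGH_BN = 50.0, 80.0  # Swiss Re range quoted in the text

share_if_low = reserved_share(RESERVED_BN, LOW_BN)    # loss lands at the bottom of the range
share_if_high = reserved_share(RESERVED_BN, HIGH_BN)  # loss lands at the top of the range

print(f"Reserved so far: {share_if_high:.0%} to {share_if_low:.0%} of the estimated loss")
print(f"Still to be recognised: ${LOW_BN - RESERVED_BN:.0f}bn to ${HIGH_BN - RESERVED_BN:.0f}bn")
```

On these rounded figures, only 25% to 40% of the eventual loss has been reserved, which would leave roughly $30 billion to $60 billion still to come through if the Swiss Re range proves right.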

I think Alex Maloney of Lancashire summarised the situation well when he said that “COVID-19 is an ongoing event and a loss which will take years to mature”, adding that for “the wider industry the first-party claims picture will not be clear until 2021”. Evan Greenberg of Chubb described the pandemic as “a slow rolling global catastrophe impacting virtually all countries, unlike other natural catastrophes it has no geographic or time limits and the event continues as we speak” and predicted that “together the health and consequent economic crisis will likely produce the largest loss in insurance history, particularly considering its worldwide scope and how both sides of the balance sheet are ultimately impacted”.

The immediate impact of COVID-19 has been on rates, with a significant acceleration of rate hardening across most lines of business and some specialty lines, such as certain D&O covers, seeing massive increases of 50%+. Many firms are reporting H1 aggregate rate increases of between 10% and 15% across their diversified portfolios. Insurance rate increases over the coming months and reinsurance rates at the January renewals, assuming no material natural cats in H2 2020, will be the key test as to whether a true hard market has arrived. Some insurers are already talking about increasing their risk retentions and their PMLs for next year in response to reinsurance rate hardening.

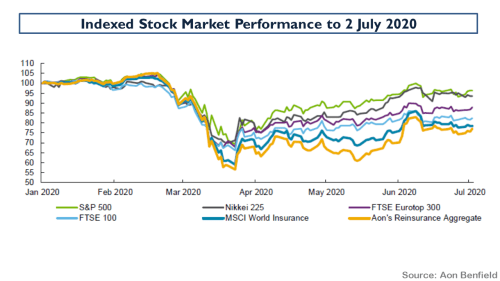

Valuations in the sector have taken a hit as the graph below from Aon on stock performance shows.

Leaving the uncertainty around COVID-19 to one side, tangible book multiples amongst several of my favourite firms since this March 2018 post look tempting, as below. Most of these firms have recently raised additional capital in anticipation of a broad hard market in specialty insurance and reinsurance.

The question is, can you leave aside the impact of COVID-19? That question is worthy of some further research, particularly on the day that Hiscox increased their COVID-19 reserves from $150 million to $230 million and indicated a hit of between £10 million and £250 million if the UK business interruption case went against them (the top of the range estimate would reduce NTAs by 9%).
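The 9% figure can be turned around to back out the level of net tangible assets it implies. The sketch below is a back-of-the-envelope calculation only: it assumes the 9% relates the full £250 million top-of-range hit to NTAs with no tax or other offsets, which is my assumption and not something Hiscox has stated, and the names used are illustrative.

```python
def implied_nta(hit_m: float, nta_reduction: float) -> float:
    """NTA (in £m) implied by a hit of hit_m reducing NTA by the fraction nta_reduction."""
    return hit_m / nta_reduction


TOP_HIT_M = 250.0     # top of the indicated range, £m
BOTTOM_HIT_M = 10.0   # bottom of the indicated range, £m
NTA_REDUCTION = 0.09  # "would reduce NTAs by 9%"

nta_m = implied_nta(TOP_HIT_M, NTA_REDUCTION)  # assumption: no tax or other offsets
bottom_share = BOTTOM_HIT_M / nta_m

print(f"Implied NTA: about £{nta_m:,.0f}m")
print(f"Bottom-of-range hit as a share of NTA: {bottom_share:.2%}")
```

In other words, the spread of outcomes runs from a rounding error to a meaningful dent in tangible book value, which is why the outcome of the case matters so much for valuation.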

Food for thought.